Deploy to AWS

All of our applications are deployed into a Kubernetes cluster running in AWS. We use Terraform to define our account and application infrastructure. For most applications, that infrastructure consists of Kubernetes config files, Route 53 entries, and IAM policies/roles.

Architecture Overview

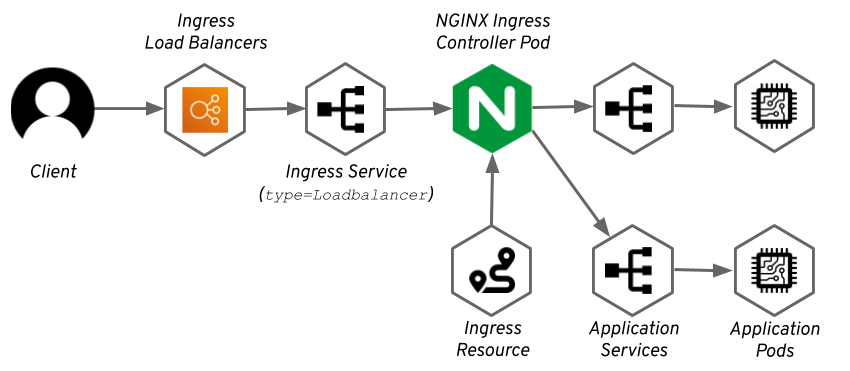

All traffic coming into the Kubernetes cluster goes through an NLB that points to an NGINX Ingress Controller pod running in the cluster. Part of deploying an application is creating an ingress, which causes the NGINX Ingress Controller to update its config and then route traffic to the service associated with a pod. When a public URL is defined (part of our deployment modules, which we’ll discuss below), a Route 53 entry is created that points to the NLB.
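To make the traffic path concrete, here is a minimal sketch of the two pieces described above: an ingress that the NGINX Ingress Controller picks up, and a Route 53 alias record pointing at the NLB. It is not taken from our deployment modules. The resource names, the host example-app.example.com, the zone example.com, and the NLB name example-nlb are all hypothetical placeholders.

```hcl
// Hypothetical sketch; every name and host below is a placeholder.

// The ingress tells the NGINX Ingress Controller which host maps to which service.
resource "kubernetes_ingress_v1" "example" {
  metadata {
    name = "example-app"
  }

  spec {
    ingress_class_name = "nginx"

    rule {
      host = "example-app.example.com"

      http {
        path {
          path      = "/"
          path_type = "Prefix"

          backend {
            service {
              name = "example-app" // the service in front of the application's pod
              port {
                number = 80
              }
            }
          }
        }
      }
    }
  }
}

// Look up the existing NLB and hosted zone (assumed names).
data "aws_lb" "ingress" {
  name = "example-nlb"
}

data "aws_route53_zone" "public" {
  name = "example.com."
}

// The public URL resolves to the NLB, which forwards to the ingress controller.
resource "aws_route53_record" "example" {
  zone_id = data.aws_route53_zone.public.zone_id
  name    = "example-app.example.com"
  type    = "A"

  alias {
    name                   = data.aws_lb.ingress.dns_name
    zone_id                = data.aws_lb.ingress.zone_id
    evaluate_target_health = false
  }
}
```

In practice the deployment modules create the equivalent resources for you; the sketch only illustrates how a public URL ends up at your pod.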

We use AWS Parameter Store to store application secrets.

Deployment guide

Requirements

- A text editor
- Have the Terraform CLI installed
- Have AWS API credentials configured/saved. You can do this by installing the AWS CLI and running `aws configure`.
    - For BYU employees, we have a tool to help us log into our AWS accounts. Install it and run `awslogin`.

Write Infrastructure

We have a few modules that simplify most of the code for a deployment. The main decision you need to make is whether your application needs permanent storage. If it does, use the Kubernetes StatefulSet module; if not, use the Kubernetes Deployment module. The two are nearly identical, but the StatefulSet module adds options for storage ("storage" in this case is an EBS volume). This guide creates a StatefulSet, but creating a Deployment is very similar. You can learn more about Deployments and StatefulSets in Kubernetes' concepts documentation.

For the av-control-api, all of the Terraform code for the core API, as well as the driver servers, is in terraform/main.tf. If you are deploying a different application, create a terraform folder at the root of the repo with a main.tf file in it.

In every Terraform configuration, you need to add the following block to tell Terraform where it should store and load infrastructure state:

    terraform {
      backend "s3" {
        bucket         = "terraform-state-storage-586877430255" // (1)
        dynamodb_table = "terraform-state-lock-586877430255"    // (1)
        region         = "us-west-2"
        key            = "SOME-UNIQUE-KEY.tfstate"              // (2)
      }
    }

(1) These values come from BYU’s configuration. They will be different if you are deploying to a different AWS account.

(2) Replace SOME-UNIQUE-KEY with something unique across all Terraform configurations deployed into your account.
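If you are deploying to a different AWS account, the state bucket and lock table have to exist before the backend can use them. The following is a minimal sketch of what that account-level setup could look like, not a copy of BYU's configuration. The account ID 123456789012 and the resource names are placeholders. The one fixed requirement is that the lock table's partition key is a string attribute named LockID, which is what the S3 backend expects.

```hcl
// Hypothetical sketch for a different account; names and the account ID are placeholders.

resource "aws_s3_bucket" "terraform_state" {
  bucket = "terraform-state-storage-123456789012"
}

// Versioning keeps earlier copies of the state file recoverable.
resource "aws_s3_bucket_versioning" "terraform_state" {
  bucket = aws_s3_bucket.terraform_state.id

  versioning_configuration {
    status = "Enabled"
  }
}

// The S3 backend locks state through a DynamoDB table keyed on "LockID".
resource "aws_dynamodb_table" "terraform_lock" {
  name         = "terraform-state-lock-123456789012"
  billing_mode = "PAY_PER_REQUEST"
  hash_key     = "LockID"

  attribute {
    name = "LockID"
    type = "S"
  }
}
```

These resources would normally live in a separate, account-level Terraform configuration rather than in an application's terraform folder.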

After configuring the Terraform backend, you need to set up the AWS and Kubernetes providers. The Kubernetes provider requires the cluster endpoint, so we have stored that value in AWS Parameter Store. We’ll talk more about pulling values from AWS Parameter Store below.

    provider "aws" {
      region = "us-west-2"
    }

    // Look up the cluster endpoint stored in AWS Parameter Store.
    data "aws_ssm_parameter" "eks_cluster_endpoint" {
      name = "/eks/av-cluster-endpoint"
    }

    provider "kubernetes" {
      host = data.aws_ssm_parameter.eks_cluster_endpoint.value
    }

Next, we’ll pull any secrets that your application requires from AWS Parameter Store. To put a secret into Parameter Store:

- Log into AWS
- Go to Systems Manager
- Select Parameter Store on the left sidebar
- Click Create Parameter, and enter the name/value of the secret.
    - If the secret will be used by multiple applications, prefix its name with `/env/`
    - If the secret will only be used by your application, prefix its name with `/env/<application-name>/`
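The console steps above could also be expressed in Terraform. The sketch below is one possible alternative, not part of our documented workflow. The parameter name /env/example-app/db-password and the variable db_password are hypothetical. Note that a parameter created this way has its value stored in the Terraform state, which is one reason to prefer the console for sensitive values.

```hcl
// Hypothetical sketch; the parameter name and variable are placeholders.

variable "db_password" {
  type      = string
  sensitive = true // keeps the value out of plan output
}

// Application-specific secret, so the name is prefixed with /env/<application-name>/
resource "aws_ssm_parameter" "db_password" {
  name  = "/env/example-app/db-password"
  type  = "SecureString"
  value = var.db_password
}
```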

Once all of your secrets are in Parameter Store, pull all the required values into terraform by using blocks like these:

    data "aws_ssm_parameter" "secret_name" {
      name = "/env/<application-name>/<secret-name>"
    }

Now that you have all of your secrets, you can use our Terraform modules to create your application.

    module "ui_prd" {
      source = "github.com/byuoitav/terraform//modules/kubernetes-statefulset"

      // required variables
      name                 = "<application-name>" // (1)
      image                = "docker.pkg.github.com/byuoitav/ui/ui"
      image_version        = "v0.5.0"
      container_port       = 8080 // (2)
      repo_url             = "https://github.com/byuoitav/ui"
      storage_mount_path   = "/opt/ui" // (3)
      storage_request_size = "25Gi"

      // optional variables
      image_pull_secret = "github-docker-registry" // (4)
      public_urls       = ["roomcontrol.av.byu.edu"] // (5)
      container_env = { // (6)
        // requires a matching data "aws_ssm_parameter" "db_address" block
        DB_USERNAME = data.aws_ssm_parameter.db_address.value
      }
      container_args = [ // (7)
        "--port", "8080",
        "--log-level", "2",
        "--some-path", "/opt/ui",
        "--some-secret", data.aws_ssm_parameter.secret_name.value
      ]
      ingress_annotations = { // (8)
        "nginx.ingress.kubernetes.io/proxy-read-timeout" = "3600"
      }
    }

(1) Append -dev to name if this is the dev version of the application.

(2) The port that your application runs on.

(3) Where the EBS volume will be mounted. This option and storage_request_size are not present for a deployment.

(4) The name of a Kubernetes secret of type kubernetes.io/dockerconfigjson. This is only required if you are pulling an image from a private Docker registry.

(5) A list of public hosts for reaching your application. If left empty or omitted, the application will only be accessible from within the cluster.

(6) Defines environment variables for your application.

(7) Defines arguments passed to your application.

(8) Annotations added to the Kubernetes Ingress, which will affect the NGINX configuration. See the NGINX Ingress Controller annotations documentation for the available options.
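For an application without permanent storage, the same pattern should carry over to the Deployment module. The sketch below is an assumption-heavy illustration: the module path modules/kubernetes-deployment is guessed by analogy with the StatefulSet module's path, and the variable names are assumed to match the StatefulSet example minus the two storage options. The application name and secret name are placeholders. Check the module's own variables before relying on it.

```hcl
// Hypothetical sketch; the module path and variable names are assumptions.

data "aws_ssm_parameter" "secret_name" {
  name = "/env/example-app/secret-name" // placeholder parameter name
}

module "example_dev" {
  // Assumed to sit next to the kubernetes-statefulset module.
  source = "github.com/byuoitav/terraform//modules/kubernetes-deployment"

  // required variables
  name           = "example-app-dev" // -dev suffix marks the dev version
  image          = "docker.pkg.github.com/byuoitav/ui/ui"
  image_version  = "v0.5.0"
  container_port = 8080
  repo_url       = "https://github.com/byuoitav/ui"

  // No storage_mount_path or storage_request_size: those apply only to a StatefulSet.
  // public_urls is omitted, so the application is reachable only from within the cluster.

  // optional variables
  container_env = {
    SOME_SECRET = data.aws_ssm_parameter.secret_name.value
  }
}
```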